At some point over the past couple of years, you have no doubt sighed to yourself “not another talk, blog, or marketing publication about AI”. This one is different!

While many in the cybersecurity industry are talking about integrating AI into their defensive cyber systems, there is far less discussion about how offensive cyber agents are going to change our landscape. It is naive to discount the possibility that we’ll see the same acceleration in offensive cyber that we’re seeing in other fields. In this post I’m going to talk about the current state of play, what is likely being developed behind closed doors, and what this will look like when it becomes widespread.

Let’s look at how we should be preparing for it.

I believe that we are in a “grace period” before we see widespread use of offensive AI rapidly accelerating the speed of compromises. We should be using our time to make sure we are prepared for it. With the human removed from the loop, tools will be able to operate, exploit, and pivot at much greater speeds than current attacks, and our existing defensive practices will struggle to deal with it. Perhaps worst of all, it’s very hard to predict when this will hit: it could be tomorrow, it could be next year, or it could be the next decade. While we can’t say for certain, it’s likely to happen during your career. Does that make you think differently?

Background

Google researchers introduced the transformer architecture that underpins contemporary LLMs (like ChatGPT) in 2017, but the company held back from launching a chatbot, reportedly because it was afraid this would damage Search. OpenAI, with a more flexible startup culture and less to lose, built on Google’s work and stunned the public with ChatGPT in November 2022. Easy-to-use APIs instantly made integration with all manner of software products trivial.

These models are all built upon the fundamental technology of neural networks, a method of AI inspired by how neurons work in the brain. A small project called AlexNet showed in 2012 that the massively parallel computation these models need could be run efficiently on Graphics Processing Units (GPUs).

Over the intervening years, DeepMind developed these neural networks to breathtaking heights, with breakthroughs like AlphaGo, AlphaZero, and MuZero demonstrating that neural networks could learn strategic reasoning, a skill previously thought to be uniquely human. What began as pattern recognition had evolved into agents capable of planning and complex problem-solving. Academics and researchers rapidly adopted these tools, fuelling scientific discovery and excitement.

Offensive Cyber Agents

When this technology is fully applied to offensive cybersecurity, we’re looking at autonomous agents that will perform compromises thousands of times faster than humans. Remember that Ivanti or Fortinet zero-day that took several hours to patch? That’s too slow.

Research falls broadly into two buckets: foundational research, which seeks to advance fundamental understanding without immediate practical application, and applied research, which builds on those foundations to solve real-world problems. Foundational research tends to advance in unpredictable fits and starts, but applied research is an incremental march of consistent refinement. I think fully automated compromise may be close because I believe the foundational technologies for full-stack offensive cyber tooling already exist, and only need applied research to get us there.

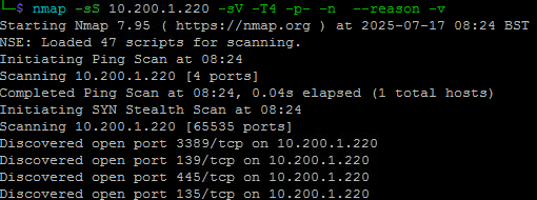

A large part of human-operated hacking takes place using command line tools. This depends slightly on the user’s preferences around tooling and the type of system being targeted, but for most non-web attacks command line tools are the go-to for offensive cyber. LLMs have shown their ability to excel at written language, and this is amongst the most impressive achievements of current neural networks. Crucially, this specific type of interaction (issuing structured, text-based commands and interpreting system output) is similar to programming, a domain where LLMs have shown surprising fluency.

However, many have tried this, and it has become clear that there are challenges. LLMs struggle with long-term memory, reliable state tracking, and understanding nuanced system context, all of which are critical for executing multi-step operations over time. They may generate plausible commands, but they lack the robustness to adapt dynamically when output deviates from expectation or when tools return ambiguous or system-specific errors.

LLMs also have fundamental problems that may limit them.

They currently consume an ever-increasing amount of compute for what seem to be marginal gains (although new models like DeepSeek suggest there may still be efficiencies to come). While they have mastered language, they struggle with simple reasoning and fail at tasks like counting the R’s in “strawberry”. Researchers are trying to overcome these problems, but there is a lot of debate over whether LLMs are “the future” or just a cool trick before we develop in another direction.

Outside of LLMs, other neural networks are progressing, but they tend to be hyper-focused on a single task. We have seen that they can perform well in complex, incomplete-information environments. In the long term, giving neural networks (or whatever comes after) direct access to the network stack (“here’s an IP header, here’s a TCP handshake, have fun”) is a realistic yet terrifying prospect.

This is where we are with AI in general, with an increasing number of tools, libraries, and APIs making it easier than ever to apply AI to different problems. In response, we’ve been inundated with new AI products over the past couple of years, with the primary public focus being tools that feed existing data into LLMs and provide a presentation layer on top of it.

Why hasn’t AI owned us yet?

Where we have seen confirmed use of AI for offensive cyber in the wild, it tends to be limited to a specific part of the attack chain: LLMs writing phishing emails, deepfakes used for social engineering, dynamic malware obfuscation, and command line tools with hyper-focused domains such as privilege escalation or SQL injection. But there are also early signs of offensive cybersecurity AI frameworks showing promise across multiple parts of the attack chain. So what’s holding it back?

Reason 1 – It’s hard

Try to play chess against an LLM and you will quickly see a problem: it will begin with expert level openings but soon descend into making impossible moves as the system has no knowledge of what state the board is actually in.

Command line hacking commands have to be entered perfectly or they will not carry out their intended actions. Large-scale or enterprise-wide compromises are rarely a single step and at a minimum likely require reconnaissance, exploitation, lateral movement, and privilege escalation. That’s just to get a foothold before you begin to exfiltrate or encrypt data, embed persistence, or do whatever else you want to do once you are in. Even those individual stages are not simple: “learn privilege escalation” is not a single problem, since privesc on a Windows system looks nothing like privesc on Linux, and similar differences exist for almost every step.

From an AI perspective, each of these is a discrete task that can be understood in isolation. However, these steps need to be carried out sequentially, often switching back and forth as new parts of the environment are discovered. Current non-LLM neural networks are normally built to solve a single challenge, while LLMs show mastery of language but their context and state fall apart as complexity and the volume of ingested data increase.
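One common way to work around the state problem, sketched minimally below, is not to trust the model’s memory at all: an orchestrator keeps an authoritative record of which stages have completed and rejects any proposed step whose prerequisites are not yet met, much as a chess engine rejects an illegal move. The stage names come from the paragraph above; the `Stage` enum, the `PREREQUISITES` table, and the `StateTracker` class are hypothetical and purely illustrative, and nothing here interacts with a real system.

```python
from enum import Enum, auto


class Stage(Enum):
    RECON = auto()
    EXPLOITATION = auto()
    LATERAL_MOVEMENT = auto()
    PRIVILEGE_ESCALATION = auto()


# Hypothetical prerequisite table: a stage may only be attempted once
# every stage listed for it has completed at least once.
PREREQUISITES: dict[Stage, set[Stage]] = {
    Stage.RECON: set(),
    Stage.EXPLOITATION: {Stage.RECON},
    Stage.LATERAL_MOVEMENT: {Stage.EXPLOITATION},
    Stage.PRIVILEGE_ESCALATION: {Stage.EXPLOITATION},
}


class StateTracker:
    """Authoritative record of progress, kept outside the model."""

    def __init__(self) -> None:
        self.completed: set[Stage] = set()
        self.history: list[Stage] = []

    def is_legal(self, proposed: Stage) -> bool:
        # Legal only if all prerequisites are met; going back to an
        # earlier stage (e.g. more recon) is always permitted.
        return PREREQUISITES[proposed] <= self.completed

    def record(self, proposed: Stage) -> None:
        if not self.is_legal(proposed):
            raise ValueError(f"{proposed.name} attempted before its prerequisites")
        self.completed.add(proposed)
        self.history.append(proposed)


tracker = StateTracker()
tracker.record(Stage.RECON)
print(tracker.is_legal(Stage.LATERAL_MOVEMENT))  # False
tracker.record(Stage.EXPLOITATION)
print(tracker.is_legal(Stage.LATERAL_MOVEMENT))  # True
```

The hard part the text describes is everything this toy leaves out: deciding what a step actually achieved from messy tool output, and keeping that record accurate as the environment changes.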

This is a hard problem regardless of whether you are trying to solve it with a generalist system like an LLM or by chaining together a set of task-specific models. Most approaches fall apart on multi-stage reasoning, and combining that with a brittle understanding of system state makes full-chain automation a nightmare.

Even without full automation, incremental improvements in just one or two stages of the chain can significantly reduce the time it takes to breach a target. Faster recon, better exploit selection, or smarter lateral movement can all shorten the window defenders have to respond, which makes even partial advances in offensive AI dangerous.

Reason 2 – The market

When LLMs first opened their APIs, there was a rush of developers trying to apply them to offensive security, with limited success for the reasons outlined above. Additionally, out-of-the-box LLMs have built-in protections designed to prevent malicious use, creating another small speed bump for would-be offensive developers hoping for quick deployment. It quickly became clear that developing offensive agents would require a serious investment of time, effort, and money that few could afford. Tools that looked superficially impressive appeared quickly, but when carrying out a complete compromise chain, small errors escalated into unsolvable problems. Despite this, there are several players in the field who are all motivated to develop successful models.

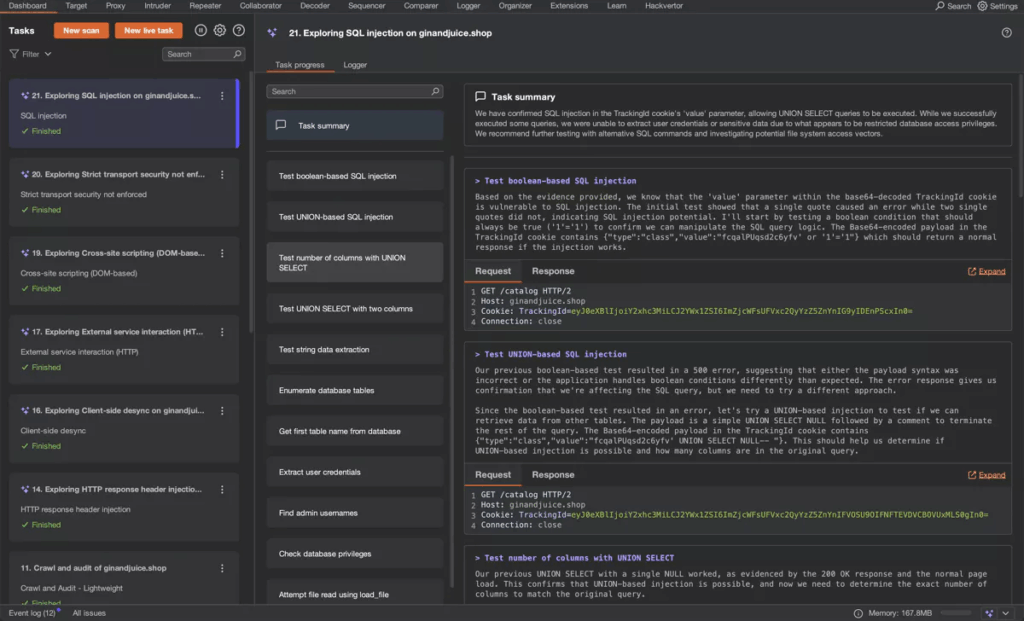

Red teams and cybersecurity vendors might invest in deep, expensive research, but most focus on quick-to-market applications that deliver immediate business value. Few maintain large, experienced research teams dedicated to genuine innovation. While some promising AI-driven web application scanners exist, they remain limited to that narrow context. Certainly many are claiming AI capabilities, and it makes good marketing material, but scratch the surface and most of it is wrapper code around LLM APIs or improved report generation. A few are pushing toward chaining real attacks, but most of what we’ve seen is focused on automating recon, evidence collection, or simulating known threats. Ultimately these platforms are designed to help defenders understand risk and provide assurance rather than burn down networks, so any tooling they have produced tends to identify vulnerabilities but stop short of exploitation.

Cybercrime, for its part, is usually about scale and speed, not novelty. Most groups run a handful of tried-and-tested chains, often starting with phishing, then escalating to ransomware or extortion. They’re creative in delivery, but the underlying techniques are well-established, and their innovation usually comes from adapting public tools rather than inventing new ones. That said, they’re fast followers, and if a vendor builds something they can use, expect to see cracked or reengineered versions in the wild within weeks.

There’s also academia. It’s not a for-profit market, but it remains a valuable source of innovation. Academic research tends to focus on narrow, well-scoped problems, and while it’s not driving end-to-end offensive AI, it has laid the foundations for many of the techniques others are now building on. These papers don’t arrive as prebuilt tools but often become the scaffolding for more applied work, particularly when published openly.

The real research, though, is almost certainly happening behind closed doors. Nation-states, particularly those with advanced signals intelligence agencies, have a long history of running years ahead of public understanding. Cryptography is the classic example: whether it’s public key crypto, elliptic curves, or quantum resistance, our intelligence agencies have proved time and time again that they are a step or two ahead. Even outside of crypto, let’s not forget that EternalBlue, the exploit behind WannaCry and NotPetya, was an NSA exploit that gave point-and-click access to the majority of Windows hosts worldwide.

If a state actor were first to deploy autonomous cyber agents, we may not even know: not because it hasn’t happened, but because the best operators are stealthy by design. Unlike ransomware gangs who benefit from noise, nation-states often pursue persistence and discretion.

So what currently exists?

Most of the current wave of offensive AI is built upon LLMs. Unsurprisingly, LLMs are particularly effective in social engineering attacks such as phishing, where their strength in language generation can be used to craft convincing, tailored messages at scale. Tools have emerged that go far beyond basic email generation: some combine LLMs with real-time LinkedIn scraping and classification models to identify high-value individuals like executives or security staff, generate context-aware phishing pretexts, and even simulate interactive conversations through voice synthesis. The integration of deepfake audio and real-time personas has already been exploited in documented fraud cases.

Some of the most promising progress may be in using LLMs for web application scanners, rapidly finding SQL injection, XSS, or authentication vulnerabilities regardless of the web tech stack being used. LLMs are also proving useful in the recon and vulnerability discovery stages of the attack chain, with tools that help pivot between data sources and summarise findings. Social engineering tools can even map social graphs or infer organisational structure, greatly speeding up target profiling. Meanwhile, in vulnerability discovery, both general-purpose LLMs and specialist models are being used to audit source code, highlight risky patterns, and guide fuzzing engines.

We’re also beginning to see experiments with autonomous or semi-autonomous exploit generation. While fully automated 0-day development remains out of reach, proof-of-concept tools have shown that LLMs can contribute meaningfully to the exploit development pipeline, for example by selecting and adapting payloads or wrapping Metasploit modules based on environment fingerprinting. Elsewhere, threat actors are exploring AI-generated evasion techniques: polymorphic loaders, regenerated scripts, and dynamically obfuscated code all aim to frustrate traditional detection mechanisms. These aren’t theoretical risks and the tools are already circulating in cybercrime communities, even if most are crude or built on older models. As capabilities improve and tooling becomes more modular and accessible, the barriers to entry for sophisticated offensive operations are likely to decrease.

What happens next?

All of these tools show promise at one part of the attack chain; if they are successfully chained together, the speed of compromise will accelerate to levels our current defensive practices cannot deal with. Even with expensive 24/7 SOCs or MSSPs, you’ll typically have SLAs of 15 minutes at best, far longer than an automated attack may need. Zero-day vulnerabilities will continue to be a challenge, as patching itself often takes hours, and that’s assuming a weekday with on-call network engineers and emergency change boards. Over the past few years we’ve seen many zero-days on network edge devices such as Fortinet and Ivanti, and I personally saw several cases where vulnerabilities were exploited but the intrusion went no deeper because the attackers were overwhelmed with options. With heavily automated attack chains, total environmental compromise could be near instantaneous.

Two things currently limit the speed of offensive cyber: 1) the knowledge of the attackers, which takes time and dedication to learn, and 2) how quickly they can work, given that they must track information across hundreds of different systems and services to target those most likely to yield results. Both limits are overcome as we automate more of the attack chain, and there are few inherent time sinks in compromises (UDP port scanning and password cracking stand out as exceptions, but they are typically optional steps). I see the dirty insides of a lot of networks, and it’s rare that there aren’t critical exploitation pathways inside; they just take time to find. If that time is reduced to near zero (in human terms), many of our current practices will not suffice.

What makes this eventual outcome likely is that we already have the foundational technology to get there; we simply need to apply it to the specific domain of offensive cyber. Don’t get me wrong, “simply” is doing some heavy lifting and this will require large, well-funded research projects, but given the stakes at play, sooner or later someone is going to get there.

So as practitioners of defensive cyber, what should we be doing to prepare?

How we can prepare

This post is not marketing material, and I’ve tried my hardest to avoid calling out specific companies or products. Regardless, I’m coordinating research at a defensive cyber organisation and this post wouldn’t be complete without covering what we’re thinking about.

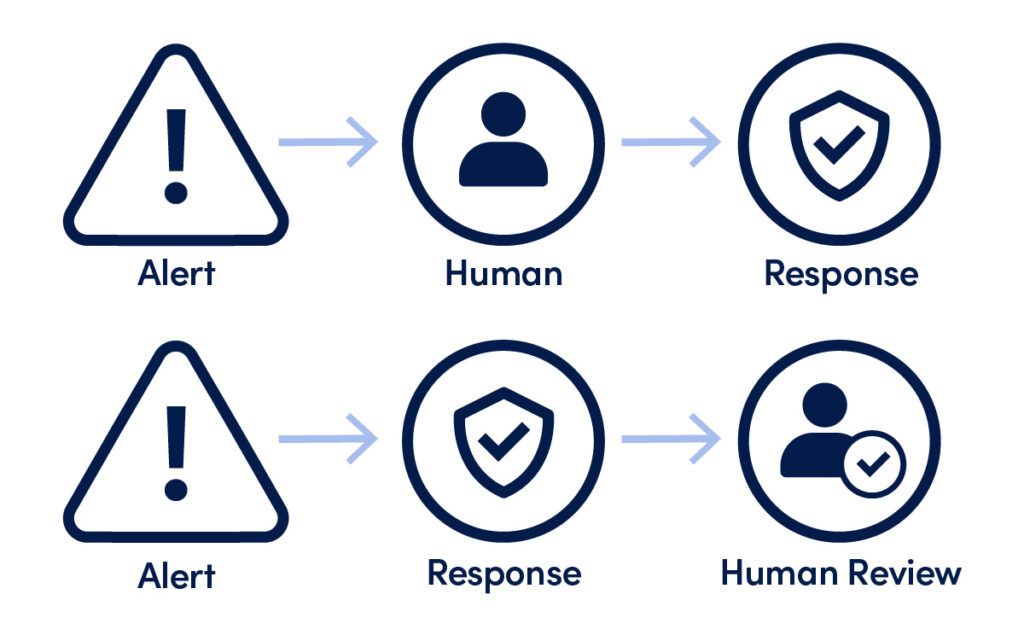

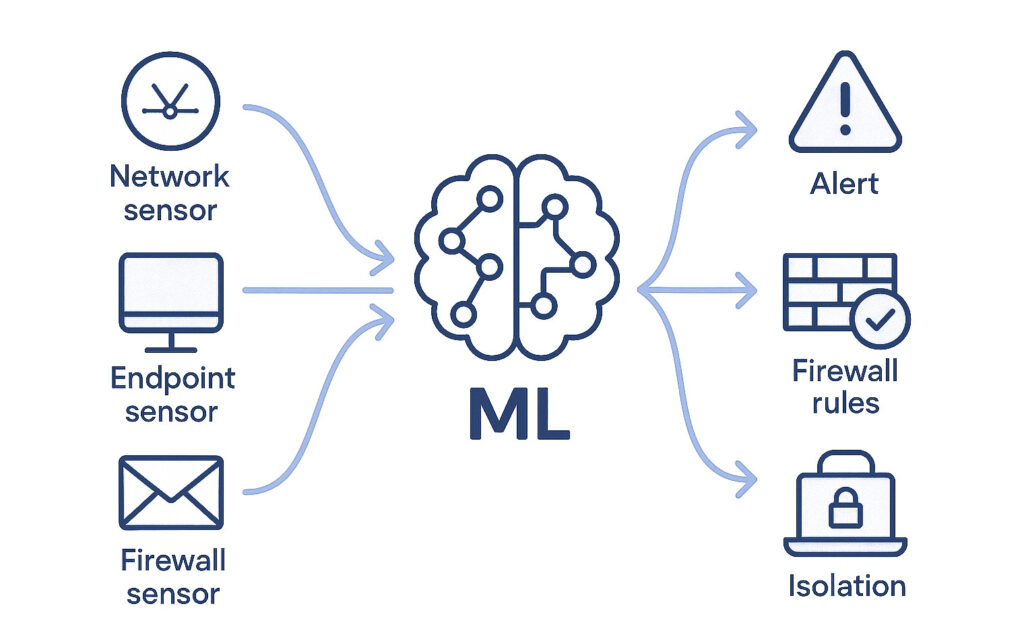

Fundamentally I think our approach to defensive cyber is going to have to change. To stop lightning fast attacks we need to deploy equally speedy automated defences. Most organisations implementing real-time cyber defences still rely on Alert -> Human -> Action, and are cautious about automating remediation that may disrupt service, such as isolating hosts, locking accounts, or changing firewall rules. This process will have to normalise Alert -> Action -> Human review. I know many of us are already doing this to a limited extent so it’s not a completely new mindset, but most organisations are rightly resistant to allowing too much automated lockdown.
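As a minimal sketch of the Alert -> Action -> Human review ordering, assume each alert arrives with a detector confidence score: alerts above a threshold trigger containment immediately, and every alert, acted on or not, is still queued for an analyst afterwards. The `Alert` and `ResponsePipeline` types, the threshold value, and the `contain` hook (which in practice might call an EDR’s host-isolation function) are all hypothetical, not any product’s interface.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Alert:
    host: str
    description: str
    score: float  # detector confidence in [0, 1]


@dataclass
class ResponsePipeline:
    """Alert -> Action -> Human review: act first, then queue for an analyst."""

    act_threshold: float  # hypothetical cut-off for automatic action
    contain: Callable[[str], None]  # hypothetical containment hook
    review_queue: list[Alert] = field(default_factory=list)

    def handle(self, alert: Alert) -> bool:
        acted = alert.score >= self.act_threshold
        if acted:
            # Contain immediately instead of waiting for a human decision.
            self.contain(alert.host)
        # Every alert still reaches a human, just after the fact.
        self.review_queue.append(alert)
        return acted


isolated: list[str] = []
pipeline = ResponsePipeline(act_threshold=0.9, contain=isolated.append)
pipeline.handle(Alert("ws-042", "suspicious Kerberos activity", 0.95))
pipeline.handle(Alert("ws-017", "unusual login time", 0.40))
print(isolated)                    # ['ws-042']
print(len(pipeline.review_queue))  # 2
```

The human is still in the loop; the change is only in ordering, so the analyst reviews (and can undo) an action rather than gating it.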

Flagging and blocking suspicious activity is easy; the problem is false positives. Each false positive causes some level of disruption, and if that disruption is too great then the cure becomes worse than the disease. There are two ways we can deal with this.

First, drive the rate of false positives down. That’s fundamentally a problem of precision, and it’s where much of the energy in automated defences is being spent today. Many existing defensive cyber products that claim AI integration are really using procedural rules and risk scoring rather than genuine machine learning classification, and that approach is unlikely to scale with adaptive automated attacks. Only by integrating genuine AI/ML models into our defensive stack (both detection and response) can we hope to match their speed.
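A quick worked example shows why precision dominates once actions are automated. The numbers below are purely illustrative assumptions, not measurements: one million events a day, of which 10 are genuinely malicious, scored by a detector that catches 99% of attacks. The function name `alert_precision` is hypothetical.

```python
def alert_precision(events: int, malicious: int, tpr: float, fpr: float) -> tuple[float, float]:
    """Return (expected false positives per day, precision) for a detector.

    tpr: fraction of malicious events flagged (true positive rate)
    fpr: fraction of benign events flagged (false positive rate)
    """
    true_pos = malicious * tpr
    false_pos = (events - malicious) * fpr
    return false_pos, true_pos / (true_pos + false_pos)


for fpr in (1e-3, 1e-5):
    fp, precision = alert_precision(1_000_000, 10, tpr=0.99, fpr=fpr)
    print(f"FPR {fpr:.0e}: ~{fp:,.0f} false positives/day, precision {precision:.1%}")
# FPR 1e-03: ~1,000 false positives/day, precision 1.0%
# FPR 1e-05: ~10 false positives/day, precision 49.7%
```

Under these assumptions, a detector that wrongly flags only 0.1% of benign events would still have about 99% of its automated actions landing on legitimate activity; it takes a hundredfold lower false positive rate before roughly half of the actions hit real attacks.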

Second, use soft containment to make false positives less painful when they occur. Accept that mistakes will happen, and the goal becomes reducing their impact on users. This means increasing reliance on techniques like sandboxing remediations, quarantining a host in a way that’s easily reversible, or limiting a user without locking their account outright.
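A minimal sketch of soft containment, assuming restrictions are tracked per entity (a host or user name) and lapse automatically unless renewed: a confirmed false positive can be undone in one step, and a forgotten one cleans itself up. The `SoftContainment` class and its one-hour default are hypothetical; what a “restriction” actually does (a quarantine VLAN, step-up MFA, read-only access) is left to the environment.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SoftContainment:
    """Reversible, time-limited restrictions instead of hard lockouts."""

    ttl_seconds: float = 3600.0  # hypothetical default: restrictions lapse after an hour
    active: dict[str, float] = field(default_factory=dict, init=False)  # entity -> expiry

    def restrict(self, entity: str, now: Optional[float] = None) -> None:
        # Record a temporary restriction rather than wiping a host or
        # disabling an account outright.
        now = time.time() if now is None else now
        self.active[entity] = now + self.ttl_seconds

    def release(self, entity: str) -> None:
        # An analyst who confirms a false positive undoes it in one step.
        self.active.pop(entity, None)

    def is_restricted(self, entity: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        expiry = self.active.get(entity)
        if expiry is None:
            return False
        if now >= expiry:
            # Expired restrictions remove themselves.
            del self.active[entity]
            return False
        return True


sc = SoftContainment(ttl_seconds=60)
sc.restrict("alice", now=0)
print(sc.is_restricted("alice", now=30))  # True
print(sc.is_restricted("alice", now=61))  # False
```

The expiry is a deliberate trade-off: a real attacker who is only softly contained may regain access when it lapses, so in practice the restriction would be renewed or escalated if detections continue.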

We need to get better at linking different information sources together and running probabilistic models of our own over them to identify anomalies. As a penetration tester, it makes absolutely no sense to me that I can launch thousands of fraudulent Kerberos requests at a Domain Controller and have it honour every one of them. Luckily, this kind of anomaly detection is what AI excels at, and it was being used for it long before LLMs generated the current buzz.
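As a minimal sketch of the simplest such probabilistic check, assume per-account counts of Kerberos requests per time interval are already being collected (for example, from Domain Controller security event 4769, “A Kerberos service ticket was requested”). Each account is compared against its own recent history using a z-score. The `KerberosRateMonitor` class and its tuning values are hypothetical; a production model would account for seasonality and peer groups.

```python
import statistics
from collections import defaultdict, deque


class KerberosRateMonitor:
    """Flags accounts whose request count jumps far above their own history."""

    def __init__(self, window: int = 24, z_threshold: float = 4.0, min_history: int = 6) -> None:
        # Hypothetical tuning: keep 24 past intervals, flag beyond 4 standard deviations.
        self.window = window
        self.z_threshold = z_threshold
        self.min_history = min_history
        self.history: dict[str, deque] = defaultdict(lambda: deque(maxlen=self.window))

    def observe(self, account: str, count: int) -> bool:
        """Record this interval's count; return True if it looks anomalous."""
        past = self.history[account]
        anomalous = False
        if len(past) >= self.min_history:
            mean = statistics.fmean(past)
            # Floor the deviation so a perfectly flat baseline can't divide by zero.
            stdev = max(statistics.pstdev(past), 1.0)
            anomalous = (count - mean) / stdev > self.z_threshold
        if not anomalous:
            past.append(count)  # only learn from traffic believed to be normal
        return anomalous


monitor = KerberosRateMonitor()
for count in [12, 9, 15, 11, 10, 13]:
    monitor.observe("svc-backup", count)    # builds a baseline
print(monitor.observe("svc-backup", 14))    # False: within the normal range
print(monitor.observe("svc-backup", 3000))  # True: thousands of requests
```

Anomalous intervals are kept out of the baseline so that a sustained burst cannot gradually teach the model that the burst is normal.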

Areas controlling identity (AD, SSO, IDaaS, CASB, etc.) need to interact with our other real-time defences: EDR, NDR, IDS/IPS, firewalls, FIM, DNS, web and email filtering, etc. Coordinating this data within a SIEM is thankfully becoming standard practice, but while SOAR allows powerful automation, its playbooks are typically predefined using static rules. Some NDR platforms do this to an extent, but we need models that can run real-time anomaly detection over whatever set of technologies a specific environment is using.
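To illustrate why linking sources helps, here is a minimal naive-Bayes-style fusion sketch. Each source contributes a likelihood ratio for “this entity is compromised”, and the ratios are added to a prior in log-odds space. The `combined_probability` function, the prior, the source names, and the ratios are all hypothetical; real systems would learn these from data, and the independence assumption rarely holds in practice.

```python
import math


def combined_probability(prior: float, likelihood_ratios: dict[str, float]) -> float:
    """Naive Bayes fusion: add each source's log-likelihood ratio to the prior log-odds.

    Assumes the sources are conditionally independent, which real telemetry
    rarely is; treat the result as a ranking signal, not a calibrated probability.
    """
    log_odds = math.log(prior / (1 - prior))
    for ratio in likelihood_ratios.values():
        log_odds += math.log(ratio)
    return 1 / (1 + math.exp(-log_odds))


# Hypothetical evidence about one user within the same short window.
signals = {
    "identity": 8.0,  # sign-in from a device never seen for this user
    "edr": 5.0,       # unusual process tree on their workstation
    "ndr": 4.0,       # SMB connections to hosts they never normally touch
}
for name, lr in signals.items():
    print(f"{name} alone: {combined_probability(0.001, {name: lr}):.1%}")
print(f"all three: {combined_probability(0.001, signals):.1%}")
# identity alone: 0.8%
# edr alone: 0.5%
# ndr alone: 0.4%
# all three: 13.8%
```

No single source here would justify action on its own, but together they move the entity from background noise to something worth acting on. A siloed tool sees only one of those weak signals.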

To prepare for the coming wave we must increase our speed of response to as near instantaneous as possible, accepting and minimising occasional disruption. As attack speed increases with automation we lose the window of opportunity that we currently depend on to respond. We must use the grace period that we have to make sure defensive automation is in place and well established before offensive automation tears our networks apart.

The rise of offensive AI is a looming inevitability that isn’t receiving the attention it deserves. We’re standing at the edge of a transformation that will redefine our entire industry. Defensive teams must shift from reactive to proactive, from manual to automated, and from siloed to integrated. The grace period we’re in won’t last forever, and when it ends the organisations that have invested in intelligent, adaptive defences will be the ones left standing. The question isn’t whether offensive AI will arrive, but whether we’ll be ready when it does.

WRITTEN BY:

Dr. Oliver Farnan, Head of Research, Reliance Cyber

Dr. Oliver Farnan has expertise in offensive security, advanced threats, and nation-state cyber policy. With a rich background in cybersecurity, Oliver has held diverse roles, including as an officer in the Royal Signals, a postdoctoral research position at Oxford University, and as the Global Head of Information Security for the INGO Oxfam. Holding a PhD in Cybersecurity, Oliver combines academic research with practical security operations, empowering organisations to effectively navigate emerging cyber threats.

About Reliance Cyber:

Reliance Cyber delivers world-class cybersecurity services tailored to the unique needs of our customers. With extensive in-house expertise and advanced technology, we protect organisations across a wide range of sectors, from enterprise to government, against the most sophisticated threats, including those from nation-state actors. Our teams safeguard critical assets, people, data, and reputations, allowing customers to focus on their core business objectives with confidence.
